I’m learning how to run an R workflow on a supercomputer. I hadn’t come across supercomputers before and didn’t know how or where to start learning to use one. It’s been quite the journey, so I thought I’d compile this list of resources as a friendly guide for anyone else who wants to get started with supercomputers.

Before I dive into it, I would like to distinguish between supercomputing and high-performance computing (HPC). These terms are often used interchangeably, as you will see me do in this post, but they are not quite the same thing in the context of advanced computing. Supercomputers are only one kind of HPC system. HPC is a broader term that encompasses the use of supercomputers and other powerful computing resources to solve advanced computational problems.

The following is an opinionated list of what I believe to be the most important concepts to learn when you’re first starting out with HPC. Of course, you can run programs on high-performance computers without understanding them, especially if you’ve been given pre-written scripts to run your programs. However, I still think they’re worth learning if you’d like to optimise your HPC usage.

HPC concepts

Here are the concepts that I found most important.

- Basic skills in using the Linux command line and a text editor such as Vim.

- Understanding the HPC components such as login nodes, compute nodes, and data nodes.

- Understanding the job scheduling system, such as Slurm.

- A basic understanding of computer architecture.

- A basic understanding of networking concepts.

Let’s break them down one by one.

Linux command line

A basic understanding of how to use the command line is useful as you’ll mostly be using the command line when accessing HPCs. When you’re on the command line, you also want to be able to use text editors like Vim or Nano to edit scripts and configuration files.
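To give a flavour of what working on the command line looks like, here is a minimal sketch of a few everyday commands. The directory and file names are made up for illustration, and everything happens in a temporary directory so nothing important is touched.

```shell
# Work in a throwaway directory.
workdir="$(mktemp -d)"
cd "$workdir"
pwd                                   # show where we are

# Create nested directories and write a one-line R script into them.
mkdir -p project/scripts
printf 'print("hello")\n' > project/scripts/hello.R

# List the directory contents and print the file.
ls -l project/scripts
cat project/scripts/hello.R

# Count the lines in the file.
line_count="$(wc -l < project/scripts/hello.R)"
echo "hello.R has $line_count line(s)"
```

On a real system you would open the file in an editor instead, for example with `vim project/scripts/hello.R` or `nano project/scripts/hello.R`.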

Resources

- The Linux Command Line by William Shotts (freely available here) is a textbook I really enjoyed. It gives an accessible and friendly explanation of what’s going on. See also my list of command line tips and resources.

- Unix and Linux: Visual QuickStart Guide (4e) by Deborah Ray and Eric Ray (Google Books) is more comprehensive than The Linux Command Line. It describes the whole Unix system, which I found really useful. Pick this up after you feel more comfortable with the command line.

Text editor skills

My preferred text editor is Vim. Here are the resources I found most helpful in learning how to use Vim:

- A quick introduction to what’s going on when you really have no clue: this guide from the Linux Handbook.

- An interactive tutorial for learning Vim: OpenVim. I liked this because it allows you to actually see what happens on the screen as you’re typing things out. I preferred this over the game Vim Adventures as 1) you can clearly see the progression of what you’re learning; and 2) it’s free.

- Otherwise you could try out the built-in `vimtutor` by typing in `vimtutor` in your terminal (assuming Vim is already installed on your device).

- For information on how to do more advanced things in Vim, this guide is pretty good.

HPC components

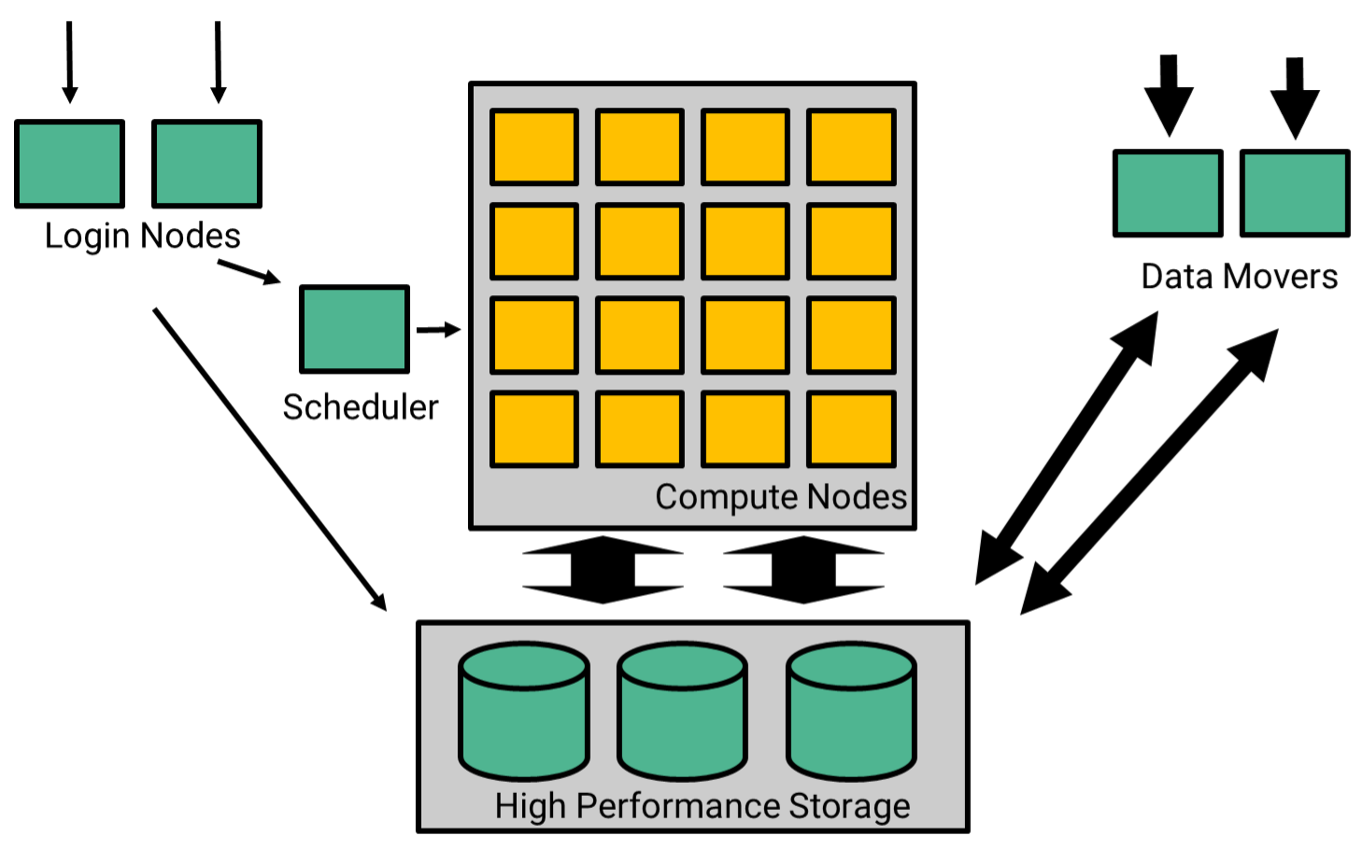

It’s important to understand the components of the supercomputer you’re using so that you have a general idea of how processes and data move between its components. As an example, the following is a simplified and generalised architecture of a supercomputer, courtesy of Pawsey (Pawsey Supercomputing Research Centre 2023).

It’s useful to understand how to access the compute nodes and storage nodes from the login node; how to move data to and from the storage system from your local computer; and how to use the job scheduling system, which is the next topic.

Job scheduling system (Slurm)

Slurm is the most widely used job scheduling system for HPC clusters, but other job schedulers are in use too. Familiarise yourself with whichever one your system uses.

I found this to be a good guide to Slurm.
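As a rough illustration of what a Slurm job looks like, the sketch below writes a small batch script to a file and checks it for syntax errors. The resource values, the module name `r`, and the script name `analysis.R` are all placeholders I made up. Your centre will have its own module names and may require extra options such as an account or partition, so treat this as a starting point rather than a working recipe.

```shell
# Write a minimal Slurm batch script to a file (all values are placeholders).
cat > run_analysis.sh <<'EOF'
#!/bin/bash
# Name shown in the queue.
#SBATCH --job-name=r-analysis
# One compute node, one task, four CPU cores for that task.
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=4
# Memory per node and wall-time limit (HH:MM:SS).
#SBATCH --mem=8G
#SBATCH --time=00:30:00
# Log file; %j expands to the job ID.
#SBATCH --output=slurm-%j.out

# Hypothetical module name; check `module avail` on your system.
module load r

# Hypothetical script name; srun launches it on the allocated resources.
srun Rscript analysis.R
EOF

# Check the script for shell syntax errors without running it.
bash -n run_analysis.sh && echo "syntax OK"

# On the login node you would then typically run:
#   sbatch run_analysis.sh     # submit the job to the queue
#   squeue -u "$USER"          # list your queued and running jobs
#   scancel <jobid>            # cancel a job by its ID
```

The `#SBATCH` lines look like comments to the shell, but Slurm reads them as the resource request for the job. That is why they sit at the top of the script, before any commands.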

Computer architecture

The best way to understand how supercomputers work is to understand how computers work to begin with, including computer architecture and computer networks. Specifically, I would want to know how processors, memory, and storage components work.
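One small way to start connecting these ideas to a real machine is to ask it what it has. The sketch below assumes a Linux system (it reads `/proc/meminfo`, which does not exist on macOS) and reports the processor cores, memory, and storage visible to your shell.

```shell
# Number of CPU cores available to this shell.
cores="$(nproc)"
echo "CPU cores: $cores"

# Total memory in kB, read from the kernel's /proc interface.
mem_kb="$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)"
echo "Total memory: $((mem_kb / 1024)) MiB"

# Free space on the filesystem that holds the current directory.
df -h .
```

Running this on a login node and again inside a job on a compute node is a quick way to see how different the two can be.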

I’ll write separate posts on these topics and link them here.

Networking concepts

Specifically, it helps to have a basic understanding of how to use SSH for secure remote access and data transfer.

For file transfer specifically, I found it useful to learn tools such as scp, sftp, or rsync for moving data into and out of the HPC system.
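Here is a minimal sketch of what these commands tend to look like. The username, hostname, and paths are hypothetical, so replace them with the details your centre gives you. By default the sketch only prints each command instead of running it, so it is safe to paste anywhere; set `DRY_RUN=0` to execute the commands for real.

```shell
# Hypothetical connection details; replace with your centre's values.
hpc_user="your_username"
hpc_host="hpc.example.org"
remote_dir="/scratch/your_project"

# Print each command instead of running it unless DRY_RUN=0 is set.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Open an interactive shell on the login node.
run ssh "${hpc_user}@${hpc_host}"

# Copy one local file to the cluster.
run scp analysis.R "${hpc_user}@${hpc_host}:${remote_dir}/"

# Sync a local directory to the cluster; only changed files are sent.
# -a preserves permissions and timestamps, -v is verbose, -z compresses.
run rsync -avz data/ "${hpc_user}@${hpc_host}:${remote_dir}/data/"

# Pull results back from the cluster to the local machine.
run rsync -avz "${hpc_user}@${hpc_host}:${remote_dir}/results/" results/
```

As a rule of thumb, scp is fine for one-off copies, while rsync is handy for large or repeated transfers because it skips files that have not changed.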

Good starting points

If this is overwhelming: I get it! I only compiled this list after spending over a month reading up and watching videos, every day, on how to use supercomputers. Here are some other places where you can find good information:

- Go to your supercomputing centre’s help page and see if they have user documentation that you can use and a support team you can reach out to. Pawsey, the supercomputing research centre I’m with, has excellent and comprehensive user support documentation, a Supercomputing User Training playlist, and a responsive support team that helps us optimise our workflow. At one point I just needed to talk to someone and ask for help, so it was really good to have access to the support team.

- Go to other supercomputing centres’ help pages and look at their user documentation and videos. A few of my favourites:

  - HPC2N (Sweden) have really good written guides. Some of my favourites are their Beginner’s intro to clusters and their explanation of Slurm.

  - SciNet HPC at the University of Toronto has excellent YouTube videos. My favourite is their Intro to Advanced Research Computing.

- Talk to someone!

I think it’s really helpful to talk to someone who’s used supercomputers before. That way you can: 1) have a general idea of what you need to do, tailored to your workflow; and 2) get an idea of what topic you need to read up on next.

Alternatively, feel free to drop me a line and I will do my best to help!